library(feisr)

data("mwp", package = "feisr")

head(mwp)

#> id year lnw exp expq marry evermarry enrol yeduc age cohort

#> 1 1 1981 1.934358 1.076923 1.159763 0 1 1 11 18 1963

#> 2 1 1983 2.468140 3.019231 9.115755 0 1 1 12 20 1963

#> 3 1 1984 2.162480 4.038462 16.309174 0 1 1 12 21 1963

#> 4 1 1985 1.746280 5.076923 25.775146 0 1 0 12 22 1963

#> 5 1 1986 2.527840 6.096154 37.163090 0 1 1 13 23 1963

#> 6 1 1987 2.365361 7.500000 56.250000 0 1 1 13 24 1963

#> yeargr yeargr1 yeargr2 yeargr3 yeargr4 yeargr5

#> 1 2 0 1 0 0 0

#> 2 2 0 1 0 0 0

#> 3 2 0 1 0 0 0

#> 4 2 0 1 0 0 0

#> 5 3 0 0 1 0 0

#> 6 3 0 0 1 0 0Fixed Effects Individual Slopes using feisr

The main purpose of the package feisr is the estimation of fixed effects individual slope models and respective test statistics. The fixed effects individual slope (FEIS) estimator is a more general version of the well-known fixed effects estimator (FE), which allows to control for heterogeneous slopes in addition to time-constant heterogeneity (e.g. Brüderl and Ludwig 2015; Frees 2001; Lemieux 1998; Polachek and Kim 1994; Rüttenauer and Ludwig 2020; Wooldridge 2010). Formally, the FEIS estimator can be expressed as

\[ \begin{align} \boldsymbol{\mathbf{y}}_{i} =& \boldsymbol{\mathbf{X}}_{i}\boldsymbol{\mathbf{\beta }}+ \boldsymbol{\mathbf{W}}_i \boldsymbol{\mathbf{\alpha}}_i + \boldsymbol{\mathbf{\epsilon}}_{i}, \end{align} \] where \(\boldsymbol{\mathbf{y}}_{i}\) is \(T \times 1\), \(\boldsymbol{\mathbf{X}}_{i}\) is \(T \times K\), and \(\boldsymbol{\mathbf{\epsilon}}_{i}\) is \(T \times 1\). \(\boldsymbol{\mathbf{W}}_i\) is a \(T \times J\) matrix of slope variables, and \(\boldsymbol{\mathbf{\alpha}}_i\) a \(J \times 1\) vector of individual-specific slope parameters, for \(J\) slope parameters including a constant term. If \(\boldsymbol{\mathbf{W}}_i\) consists of a constant term only, \(\boldsymbol{\mathbf{W}}_i = \boldsymbol{\mathbf{1}}\), thus \(\boldsymbol{\mathbf{\alpha}}_i\) reduces to \(\alpha_{i1}\), and the above equation represents the well-known formula of a conventional FE model with individual fixed effects.

As with the conventional FE, FEIS can be estimated using lm() by including \(N-1\) individual-specific dummies and interaction terms of each slope variable with the \(N-1\) individual-specific dummies (\((N-1) *J\) controls). This is however highly inefficient. As with the conventional FE estimator, we can achieve the same result by running an lm() on pre-transformed data. Therefore, specify the ‘residual maker’ matrix \(\boldsymbol{\mathbf{M}}_i = \boldsymbol{\mathbf{I}}_T - \boldsymbol{\mathbf{W}}_i(\boldsymbol{\mathbf{W}}^\intercal_i \boldsymbol{\mathbf{W}}_i)^{-1}\boldsymbol{\mathbf{W}}^\intercal_i\), and estimate \[

\begin{align}

y_{it} - \hat{y}_{it} =& (\boldsymbol{\mathbf{x}}_{it} - \hat{\boldsymbol{\mathbf{x}}}_{it})\boldsymbol{\mathbf{\beta }}+ \epsilon_{it} - \hat{\epsilon}_{it}, \\

\boldsymbol{\mathbf{M}}_i \boldsymbol{\mathbf{y}}_i =& \boldsymbol{\mathbf{M}}_i \boldsymbol{\mathbf{X}}_i\boldsymbol{\mathbf{\beta }}+ \boldsymbol{\mathbf{M}}_i \boldsymbol{\mathbf{\epsilon}}_{i}, \\

\tilde{\boldsymbol{\mathbf{y}}}_{i} =& \tilde{\boldsymbol{\mathbf{X}}}_{i}\boldsymbol{\mathbf{\beta }}+ \tilde{\boldsymbol{\mathbf{\epsilon}}}_{i},

\end{align}

\] where \(\tilde{\boldsymbol{\mathbf{y}}}_{i}\), \(\tilde{\boldsymbol{\mathbf{X}}}_{i}\), and \(\tilde{\boldsymbol{\mathbf{\epsilon}}}_{i}\) are the residuals of regressing \(\boldsymbol{\mathbf{y}}_{i}\), each column-vector of \(\boldsymbol{\mathbf{X}}_{i}\), and \(\boldsymbol{\mathbf{\epsilon}}_{i}\) on \(\boldsymbol{\mathbf{W}}_i\). Intuitively, we (1) estimate the individual-specific predicted values for the dependent variable and each covariate based on an individual intercept and the additional slope variables of \(\boldsymbol{\mathbf{W}}_i\), (2) ‘detrend’ the original data by these individual-specific predicted values, and (3) run an OLS model on the residual data. Similarly, we can estimate a correlated random effects (CRE) model (Chamberlain 1982; Mundlak 1978; Wooldridge 2010) including the individual specific predictions \(\hat{\boldsymbol{\mathbf{X}}}_{i}\) to obtain the FEIS estimator: \[

\begin{align}

\boldsymbol{\mathbf{y}}_{i} =& \boldsymbol{\mathbf{X}}_{i}\boldsymbol{\mathbf{\beta }}+ \hat{\boldsymbol{\mathbf{X}}}_{i}\boldsymbol{\mathbf{\rho }}+ \boldsymbol{\mathbf{\epsilon}}_{i}.

\end{align}

\] We use this transformation of the FEIS estimator to derive a Hausman-like Artificial Regression Test (ART) for hetergeneous slopes in panel or otherwise nested models.

The main functions of the feisr package are:

feis(): Estimates fixed effects individual slope estimators by applying linearlmmodels to ‘detrended’ data.feistest(): Estimates a regression-based Hausman test for fixed effects individual slope models.bsfeistest(): Estimates a bootstrapped Hausman test for fixed effects individual slope models.slopes(): Extracts the individual slopes fromfeisobjects.

The functions included in the R package feisr are also available in the xtfeis ado for Stata. The package plm (Croissant and Millo 2008, 2019) provides estimation of related models, as for instance the mean group (MG) or common correlated effects mean groups (CCEMG) estimator via pmg() function, and the estimator of models with variable coefficients via pvcm().

Estimating feis models

We demonstrate the most important functions of the feisr package by conducting an exemplary replication of the results presented in Ludwig and Brüderl (2018). We therefore use the mwp panel data, containing information on wages and family status of 268 men. This is a random sample drawn from the National Longitudinal Survey of Youth (Bureau of Labor Statistics 2014), and more details on the selection of observations and variable construction can be found in Ludwig and Brüderl (2018). To load the package and data use:

The data set contains a unique person identifier (id) and survey year indicator (year). Furthermore, we have information about the log hourly wage rate (lnwage), work experience (exp) and its square (expq), family status (marry), enrollment in current education (enrol), years of formal education education (yeduc), age (age), birth cohort (cohort), and a grouped year indicator (yeargr).

To illustrate the usage of the feisr package, we exemplary investigate the ‘marriage wage premium’: we analyze whether marriage leads to an increase in the hourly wage for men. We use the function feis to estimate fixed effects individual slope models to control for the hypothesis that those men who are more likely to marry or marry earlier, also have a steeper wage growth over time. Similar to the plm function, feis requires to indicate a unique person / group identifier. To include individual-specific slopes, feis uses two-part formulas (expr | slope_expr), where slope_expr gives the expression for modelling the individual slopes.

In the most simple setting, we just regress the wage (lnw) on the dichotomous marriage indicator (marry) and the control variable year. Instead of controlling for a common linear time trend (lnw ~ marry + year), we use year as slope variable in feis() and thus allow each individual in our dataset to have their own linear time trend (lnw ~ marry | year):

wages.feis0 <- feis(lnw ~ marry | year, data = mwp, id = "id")

summary(wages.feis0)

#>

#>

#> Call:

#> feis(formula = lnw ~ marry | year, data = mwp, id = "id")

#>

#>

#> Residuals:

#> Min. 1st Qu. Median 3rd Qu. Max.

#> -2.4525833 -0.1252836 0.0086407 0.1443467 1.9814428

#>

#> Coefficients:

#> Estimate Std. Error t-value Pr(>|t|)

#> marry 0.067184 0.023025 2.9178 0.003555 **

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Normal standard errors

#> Slope parameters: year

#> Total Sum of Squares: 247.46

#> Residual Sum of Squares: 246.64

#> R-Squared: 0.0033108

#> Adj. R-Squared: 0.0029892The summary() function prints the model output with the conventional information. The result of this simple model suggests that marriage increases the wage above what we would have expected based on each individual’s linear time trend. Note however that the assumption of a linear time trend or a linear effect of other control variables might be spurious. In our example, it is highly unlikely that wages are linearly increasing with time / age. Thus, more complex transformations of the slope variable(s) might be necessary in many applications (see e.g. Kneip and Bauer 2009; Wolfers 2006 for further examples).

An advantage of FEIS is that it allows to use any observed variable to be specified as individual-specific covariate. The formula in feis() also allows ‘as is’ transformations of original variables using I(). To replicate the analysis of Ludwig and Brüderl (2018), we use work experience (exp) and squared work experience as the slope variables:

wages.feis <- feis(lnw ~ marry + enrol + yeduc + as.factor(yeargr)

| exp + I(exp^2), data = mwp, id = "id")

summary(wages.feis)

#>

#>

#> Call:

#> feis(formula = lnw ~ marry + enrol + yeduc + as.factor(yeargr) |

#> exp + I(exp^2), data = mwp, id = "id")

#>

#>

#> Residuals:

#> Min. 1st Qu. Median 3rd Qu. Max.

#> -2.0790815 -0.1050450 0.0046876 0.1112708 1.9412090

#>

#> Coefficients:

#> Estimate Std. Error t-value Pr(>|t|)

#> marry 0.0134582 0.0273006 0.4930 0.6221

#> enrol -0.1181725 0.0234275 -5.0442 4.913e-07 ***

#> yeduc -0.0020607 0.0137673 -0.1497 0.8810

#> as.factor(yeargr)2 -0.0464504 0.0352096 -1.3193 0.1872

#> as.factor(yeargr)3 -0.0189333 0.0510825 -0.3706 0.7109

#> as.factor(yeargr)4 -0.1361305 0.0616378 -2.2086 0.0273 *

#> as.factor(yeargr)5 -0.1868589 0.0769889 -2.4271 0.0153 *

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Normal standard errors

#> Slope parameters: exp, I(exp^2)

#> Total Sum of Squares: 190.33

#> Residual Sum of Squares: 185.64

#> R-Squared: 0.024626

#> Adj. R-Squared: 0.022419In the example above, we can see that marriage has only a small effect of 0.013, and is not statistically significant. Note that the (first stage) slope regression, ‘detrending’ the data, always includes an intercept to control for constant person-specific differences. The regression on the detrended data, by default, does not contain an overal intercept (intercept = FALSE). By default, the feis() functions returns conventional standard errors for homoscedastic errors (the scaled variance-covariance matrix is attached to the output objects and can be extracted by vcov()). Using the option robust = TRUE instead returns panel-robust standard errors (e.g. Arellano 1993; Berger, Graham, and Zeileis 2017; Wooldridge 2010):

To compare the results with a conventional fixed effects and random effects models, we use the well-known plm (Croissant and Millo 2008) package and estimate a within and a random effects model. Note that the feis() function would produce identical results to a plm(..., model = "whitin", effect = "individual") model if specified with an intercept in the slope variables only (without any additional slope variables). However, for this case, we recommend using the well-established plm package instead.

library(plm)

wages.fe <- plm(lnw ~ marry + enrol + yeduc + as.factor(yeargr)

+ exp + I(exp^2), data = mwp, index = c("id", "year"),

model = "within", effect = "individual")

wages.re <- plm(lnw ~ marry + enrol + yeduc + as.factor(yeargr)

+ exp + I(exp^2), data = mwp, index = c("id", "year"),

model = "random", effect = "individual")The difference between the three models produced above can be visualized using the screenreg() and texreg() functions of the texreg package (Leifeld 2013). Since version 1.37.1, the texreg packages comes with an extract method for feis models.

library(texreg)

#> Warning: package 'texreg' was built under R version 4.4.2

#> Version: 1.39.4

#> Date: 2024-07-23

#> Author: Philip Leifeld (University of Manchester)

#>

#> Consider submitting praise using the praise or praise_interactive functions.

#> Please cite the JSS article in your publications -- see citation("texreg").

screenreg(list(wages.feis, wages.feis.rob, wages.fe, wages.re), digits = 3,

custom.model.names = c("FEIS", "FEIS robust", "FE", "RE"))

#>

#> ==========================================================================

#> FEIS FEIS robust FE RE

#> --------------------------------------------------------------------------

#> marry 0.013 0.013 0.078 *** 0.091 ***

#> (0.027) (0.029) (0.022) (0.020)

#> enrol -0.118 *** -0.118 *** -0.208 *** -0.202 ***

#> (0.023) (0.024) (0.023) (0.021)

#> yeduc -0.002 -0.002 0.056 *** 0.063 ***

#> (0.014) (0.018) (0.007) (0.005)

#> as.factor(yeargr)2 -0.046 -0.046 -0.141 *** -0.157 ***

#> (0.035) (0.038) (0.030) (0.029)

#> as.factor(yeargr)3 -0.019 -0.019 -0.165 *** -0.197 ***

#> (0.051) (0.052) (0.047) (0.044)

#> as.factor(yeargr)4 -0.136 * -0.136 * -0.276 *** -0.316 ***

#> (0.062) (0.062) (0.062) (0.057)

#> as.factor(yeargr)5 -0.187 * -0.187 * -0.298 *** -0.349 ***

#> (0.077) (0.074) (0.079) (0.073)

#> exp 0.073 *** 0.074 ***

#> (0.009) (0.008)

#> exp^2 -0.001 *** -0.001 ***

#> (0.000) (0.000)

#> (Intercept) 1.562 ***

#> (0.071)

#> --------------------------------------------------------------------------

#> R^2 0.025 0.025 0.414 0.440

#> Adj. R^2 0.022 0.022 0.357 0.439

#> Num. obs. 3100 3100 3100 3100

#> Num. groups: id 268 268

#> RMSE 0.285 0.285

#> s_idios 0.341

#> s_id 0.279

#> ==========================================================================

#> *** p < 0.001; ** p < 0.01; * p < 0.05The most striking difference here is the effect of marriage on wage. The FE returns a highly significant effect of marriage on the logarithmic wage of 0.078, which exceeds the prediction of the FEIS by 0.065. Consequently, according to the FE, we would conclude that there really is a ‘marital wage premium’ for men. The random effects model produces results, which are relatively similar to the results of the conventional FE model. However, the FEIS revises this conclusion by showing that a substantial part of the effect stems from the fact that men with a steeper wage growth tend to marry at higher rates (those men with a stronger effect of experience on wage also exhibit a stronger effect of experience on marriage). In consequence, we can conclude that the ‘marital wage premium’ mainly stems from selection on growth rather than from a causal effect of marriage on wage. The models also differ in terms of explained variance: \(R^2\) of 0.414 in the FE compared to 0.025 in the FEIS model. However, this seems quite plausible, given that we have ‘discarded’ all the variance in wages, which can be explained by individual work experience in the FEIS model.

Hausman-like specification tests

The example above illustrates how heterogeneous growth curves related to the covariates can drastically influence the conclusions drawn in conventional fixed effects models. For applied research, we thus propose to test for the presence of heterogeneous slopes when using panel models. Therefore, the function feistest() provides a Hausman-like artificial regression test (as discussed in Rüttenauer and Ludwig 2020), which estimates the Mundlak specification (Mundlak 1978) of the FEIS model and performs a Wald \(\chi^2\) test on the coefficients of the individual-specific predicted values using the aod package (Lesnoff and Lancelot 2012). The test can be performed on the FEIS model estimated by feis(), and no further model specifications are necessary. By default, the test is jointly performed on all covariates given on the right side of the formula. If required, the test can also be restricted to specified covariates only using the option terms (e.g. feistest(wages.feis, terms = c("marry", "enrol"))). With the option type = "all", the function feistest() provides a test of FEIS against FE, the regression-based version of the well-known Hausman test comparing FE against RE (Hausman 1978; Wooldridge 2010), and a third test comparing the FEIS directly against the RE model. In the example below, we use cluster-robust standard errors for the artificial regression test. A summary method is provided in the package:

ht <- feistest(wages.feis, robust = TRUE, type = "all")

summary(ht)

#>

#>

#> Call:

#> feis(formula = lnw ~ marry + enrol + yeduc + as.factor(yeargr) |

#> exp + I(exp^2), data = mwp, id = "id")

#>

#> Robust Artificial Regression Test

#>

#> FEIS vs. FE:

#> ------------

#> H0: FEIS and FE estimates consistent

#> Alternative H1: FE inconsistent

#> Model constraints: marry_hat, enrol_hat, yeduc_hat, as_factor_yeargr_2_hat,

#> as_factor_yeargr_3_hat, as_factor_yeargr_4_hat, as_factor_yeargr_5_hat = 0

#>

#> Chi-squared test:

#> Chisq = 49.558, df = 7, P(> X2) = 1.7639e-08

#>

#>

#> FE vs. RE:

#> ------------

#> H0: FE and RE estimates consistent

#> Alternative H1: RE inconsistent

#> Model constraints: marry_mean, enrol_mean, yeduc_mean, as_factor_yeargr_2_mean,

#> as_factor_yeargr_3_mean, as_factor_yeargr_4_mean, as_factor_yeargr_5_mean,

#> exp_mean, exp_2_mean = 0

#>

#> Chi-squared test:

#> Chisq = 13.087, df = 9, P(> X2) = 0.15872

#>

#>

#> FEIS vs. RE:

#> ------------

#> H0: FEIS and RE estimates consistent

#> Alternative H1: RE inconsistent

#> Model constraints: marry_hat, enrol_hat, yeduc_hat, as_factor_yeargr_2_hat,

#> as_factor_yeargr_3_hat, as_factor_yeargr_4_hat, as_factor_yeargr_5_hat = 0

#>

#> Chi-squared test:

#> Chisq = 55.231, df = 7, P(> X2) = 1.342e-09Alternatively, we can also use a bootstrapped Hausman test to compare the FEIS, the FE, and the RE model. The function bsfeistest() performs bootstrapping by pairs cluster resampling from the original dataset with replacement, meaning that we run a feis, a plm within model, and a plm random model for each rep random sample. Note that resampling is perfomed on the cluster ids. Thus, for unbalanced samples, only the number of clusters is identical in each replication while the total number of observations can vary. This method is analogue to the ‘bootstrap-se’ method described in Cameron, Gelbach, and Miller (2008), allowing us to receive an empirical estimate of the variance-covariance matrix \({\boldsymbol{\mathbf{V}}}_{\hat{\boldsymbol{\mathbf{\beta}}}_1 - \hat{\boldsymbol{\mathbf{\beta}}}_0}\) and to perform the original version of the Hausman test (Hausman 1978).

bsht <- bsfeistest(wages.feis, type = "all", rep = 100, seed = 91020104)

#> Simulations completed (by 10):

#> +++++++++ 100

summary(bsht)

#>

#>

#> Call:

#> feis(formula = lnw ~ marry + enrol + yeduc + as.factor(yeargr) |

#> exp + I(exp^2), data = mwp, id = "id")

#>

#> Bootstrapped Hausman Test

#> Repetitions: 100

#>

#> FEIS vs. FE:

#> ------------

#> H0: FEIS and FE estimates consistent

#> Alternative H1: FE inconsistent

#> Model constraints: beta_FEIS = beta_FE for: marry, enrol, yeduc,

#> as_factor_yeargr_2, as_factor_yeargr_3, as_factor_yeargr_4, as_factor_yeargr_5

#>

#>

#> Chi-squared test:

#> Chisq = 48.112, df = 7, P(> X2) = 3.3855e-08

#>

#>

#> FE vs. RE:

#> ------------

#> H0: FE and RE estimates consistent

#> Alternative H1: RE inconsistent

#> Model constraints: beta_FE = beta_RE for: marry, enrol, yeduc,

#> as_factor_yeargr_2, as_factor_yeargr_3, as_factor_yeargr_4, as_factor_yeargr_5,

#> exp, exp_2

#>

#> Chi-squared test:

#> Chisq = 13.458, df = 9, P(> X2) = 0.14297

#>

#>

#> FEIS vs. RE:

#> ------------

#> H0: FEIS and RE estimates consistent

#> Alternative H1: RE inconsistent

#> Model constraints: beta_FEIS = beta_RE for: marry, enrol, yeduc,

#> as_factor_yeargr_2, as_factor_yeargr_3, as_factor_yeargr_4, as_factor_yeargr_5

#>

#>

#> Chi-squared test:

#> Chisq = 56.537, df = 7, P(> X2) = 7.388e-10The first test comparing FEIS against FE offers a clear rejection of the null- hypothesis that FE is consistent. A highly significant \(\chi^2\) of 49.558 (48.112 in the bootstrapped version) indicates that estimates of the conventional FE are inconsistent because of heterogeneous slopes. Thus, we should use FEIS instead of FE. Interestingly, the second test of FE against RE gives a non-significant \(\chi^2\) of 13.087 with p=0.159 (or 13.458 with p=0.143 respectively), indicating that we could use an RE model instead of a conventional FE model. This result is in line with the fact that RE and FE produce relatively similar coefficients in our example.

Thus, when RE is the preferred model, we highly recommend to test the RE against the FE and against the FEIS model. The third test statistic of feistest() and bsfeistest() offers a direct comparison of FEIS against RE: both versions – artificial regression based and bootstrapped – show a highly significant \(\chi^2\) of 55.231 with p=0 (or 56.537 with p=0 respectively), thereby indicating that we should reject the null-hypothesis of consistent estimates in the RE model. In sum, when ignoring the possibility of heterogeneous slopes, we would lean towards using a conventional RE model, thereby relying on an effect of marriage which equals 7 times the effect obtained in a model accounting for individual-specific slopes.

Extracting individual slopes

Occasionally, estimates of individual slopes might be of interest in themselves. For example, we could compute the average conditional slopes over the sample of \(i\) as estimates for \(E(\boldsymbol{\mathbf{\alpha}}_{i2})\). Similarly, we might compare estimated slopes across treatment groups. Using the function slopes() on an object of class “feis” returns the individual slope estimates for each id:

alpha <- slopes(wages.feis)

head(alpha)

#> (Intercept) exp I(exp^2)

#> 1 1.888163 0.151121316 -0.004378886

#> 2 2.260406 0.242650679 -0.020343662

#> 3 2.624885 -0.005875327 0.001429338

#> 4 2.654415 0.009721989 0.002030869

#> 5 2.284673 0.347351383 -0.011189687

#> 6 2.199719 0.197108584 -0.007619929As shown above, we receive a matrix which contains the individual ids in the rownames. For each individual, we get an intercept (the individual fixed effects as in a conventional FE), and a coefficient for each slope variable (in our case exp and squared exp). We can use the rownames to merge the individual slopes to the original data.

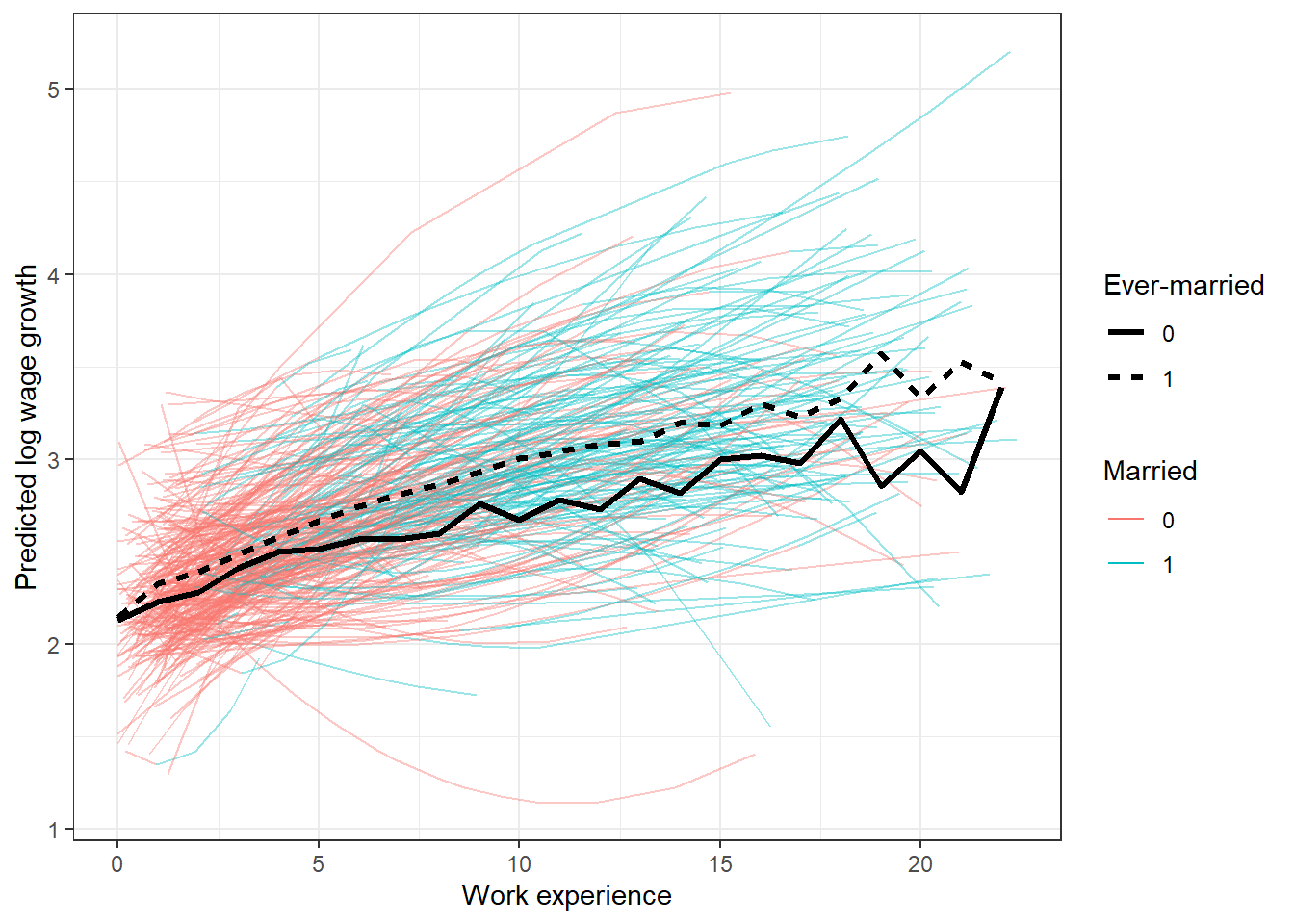

Thus, we can also use the individual slope parameters for further transformation or analysis. As an example, we plot the predicted ln wage trends by marriage status using ggplot2 (Wickham 2016), and also add the average trends by marriage group (ever married vs. never married).

### Individual predicted trend of lnw (based on slopes)

mwp$pred <- mwp$slp_.Intercept. + mwp$exp * mwp$slp_exp + mwp$exp^2 * mwp$slp_I.exp.2.

### Average value by evermarry and exp bins

mwp$exp_gr <- round(mwp$exp, 0)

aggr <- aggregate(mwp$pred, by = list(exp = mwp$exp_gr, evermarry = mwp$evermarry), mean)

### Plot

library(ggplot2)

#> Warning: package 'ggplot2' was built under R version 4.4.3

zp1 <- ggplot(data = mwp, aes(x = exp, y = pred)) +

geom_line(aes(group = id, col = as.factor(marry)),

linetype = "solid", lwd = 0.5, alpha = 0.4) +

geom_line(data = aggr, aes(y = x, linetype = as.factor(evermarry)),

alpha = 1, size = 1.2) +

labs(color = "Married", linetype = "Ever-married") +

guides(colour = guide_legend(override.aes = list(alpha = 1))) +

labs(y = "Predicted log wage growth", x = "Work experience") +

theme_bw()

#> Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

#> ℹ Please use `linewidth` instead.

zp1

This example shows that the data exhibits strong heterogeneity in the predicted wage growth over experience. It also shows, that even conditional on the overall marriage effect – marry is controlled for in the model from which the slopes are extracted – ever-married individuals, on average, have a stronger wage growth over the years than never-married men. Furthermore, the average trends already start to diverge when most respondents are still unmarried. This leads to a violation of the parallel trends assumption and biased point estimates in conventional FE models.

For more information on FEIS models, the respective test statistics, and applied examples, see also Rüttenauer and Ludwig (2020).